Explainable AI (XAI) is transforming fraud detection by making AI decisions clear and understandable. Here's how it helps:

- Better Fraud Detection: XAI-powered systems identify fraud more accurately and reduce false positives (e.g., Anti-Money Laundering false positives dropped from 30% to 5%).

- Transparency: Tools like SHAP and LIME explain why transactions are flagged, helping teams focus on real threats and meet regulatory requirements.

- Faster Investigations: Clear AI insights speed up fraud investigations, cutting review times from hours to minutes.

- Adaptability: Explainable neural networks and meta-learning allow AI to adjust to new fraud patterns while staying transparent.

| Feature | Traditional Systems | XAI Systems |
|---|---|---|
| False Positive Rate | 30% | 5% |
| Decision Transparency | Minimal | Clear and Detailed |
| Investigation Time | Hours per case | Minutes per case |
| Adaptation to New Threats | Limited | Dynamic Adjustment |

Explainable AI and Fraud Detection

Definition of Explainable AI (XAI)

Explainable AI (XAI) focuses on making AI systems more transparent by clarifying how decisions are made. Unlike traditional "black box" models, XAI provides detailed insights into the reasoning behind decisions, particularly in fraud detection [1].

Financial institutions benefit from XAI by being able to:

- Understand the reasoning behind fraud alerts, including transaction details.

- Detect and address biases or inaccuracies in the detection models.

- Make precise adjustments to enhance detection accuracy.

Why Transparency Matters in Fraud Detection

Traditional fraud detection systems are often inefficient because they give analysts little insight into why a transaction was flagged.

"Explainable AI helps security teams understand threats, improving detection and response strategies." - MixMode, 2024-09-05 [4]

XAI has made a noticeable impact in this field. For instance, AI systems using explainable methods have cut false positive rates in Anti-Money Laundering (AML) detection from 30% to just 5% [2]. This improvement not only enhances detection but also simplifies operations, benefiting both institutions and their customers.
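To put the cited drop in perspective, the arithmetic below works through a hypothetical alert queue. Two things are assumptions made for illustration: the queue size of 1,000 alerts, and the reading of "false positive rate" as the share of reviewed alerts that turn out to be legitimate. The source does not define the metric.

```python
def false_alerts(alerts_reviewed: int, false_positive_rate: float) -> int:
    """Alerts that turn out to be legitimate activity (rounded to whole alerts)."""
    return round(alerts_reviewed * false_positive_rate)


def relative_reduction(before: float, after: float) -> float:
    """Fractional drop from `before` to `after`."""
    return (before - after) / before


ALERTS_REVIEWED = 1_000  # hypothetical queue size, not from the source

wasted_before = false_alerts(ALERTS_REVIEWED, 0.30)
wasted_after = false_alerts(ALERTS_REVIEWED, 0.05)
reduction = relative_reduction(0.30, 0.05)

print(f"False alerts per {ALERTS_REVIEWED} reviewed: {wasted_before} -> {wasted_after}")
print(f"Relative reduction: {reduction:.1%}")
```

Under these assumptions, analysts would chase 250 fewer dead-end alerts per 1,000 reviewed, a relative reduction of roughly 83%.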

| Aspect | Traditional Systems | XAI-Enhanced Systems |
|---|---|---|
| False Positive Rate | 30% | 5% |
| Decision Transparency | Minimal | Clear and Detailed |
| Investigation Efficiency | Slow and Complex | Faster and Accurate |
| Regulatory Compliance | Basic | Advanced |

XAI's transparency is critical in several areas:

- Regulatory Compliance and Trust: Clear explanations for fraud alerts help meet regulatory standards and foster trust among customers, regulators, and partners [1].

- Operational Improvements: Fraud investigation teams can quickly analyze alerts, speeding up case resolution and improving accuracy [1].

Adopting XAI techniques makes AI systems more understandable and efficient, creating a secure and trustworthy environment for all involved. Next, we'll dive into tools like SHAP and LIME that bring this transparency into action.

Methods for Using Explainable AI in Fraud Detection

SHAP for Fraud Prediction

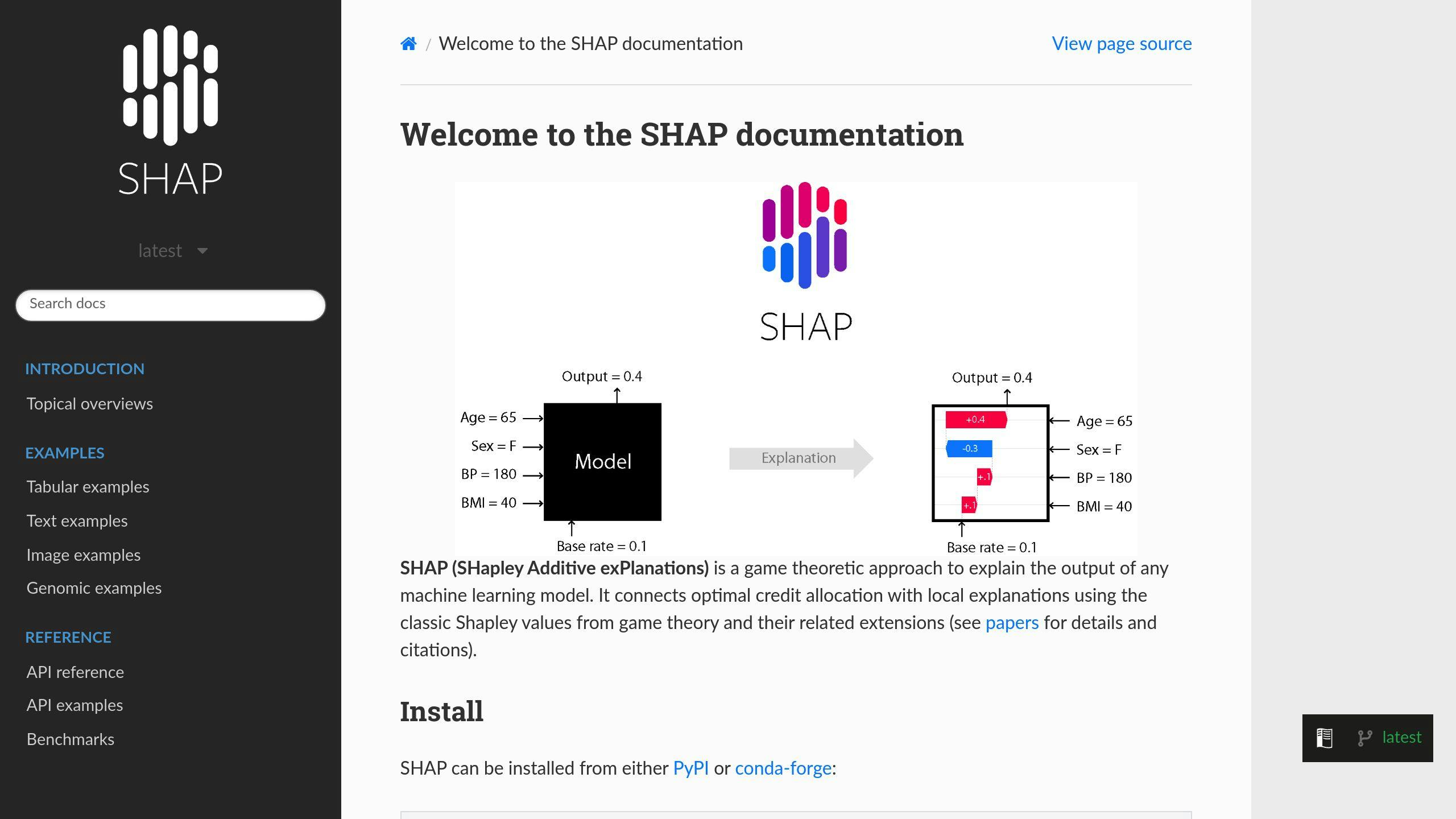

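SHAP (SHapley Additive exPlanations) breaks down an AI decision to show how each transaction feature contributed to a fraud alert. It assigns every feature a Shapley value, a concept from cooperative game theory, and these values add up to the difference between the model's score for the transaction and its baseline score. This makes AI-driven fraud detection more transparent by quantifying the contribution of each feature.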
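A minimal sketch of the idea behind Shapley attribution, computed exactly by enumerating every feature ordering. Everything here is hypothetical: the scoring function `fraud_score`, its weights, the feature names, and the baseline "typical" transaction. The `shap` library estimates these values efficiently for real models; brute-force enumeration like this is only practical for a handful of features.

```python
from itertools import permutations

FEATURES = ["unusual_location", "odd_hour", "amount_ratio", "merchant_risk"]


def fraud_score(tx: dict) -> float:
    """Hypothetical scoring model; the last term is a feature interaction."""
    score = 0.40 * tx["unusual_location"] + 0.15 * tx["odd_hour"]
    score += 0.10 * tx["amount_ratio"] + 0.05 * tx["merchant_risk"]
    score += 0.20 * tx["unusual_location"] * tx["odd_hour"]
    return score


def shapley_values(model, tx: dict, baseline: dict) -> dict:
    """Average each feature's marginal contribution over all feature orderings."""
    orderings = list(permutations(FEATURES))
    totals = {name: 0.0 for name in FEATURES}
    for order in orderings:
        current = dict(baseline)          # start from the reference transaction
        previous = model(current)
        for name in order:
            current[name] = tx[name]      # switch one feature to its real value
            updated = model(current)
            totals[name] += updated - previous
            previous = updated
    return {name: total / len(orderings) for name, total in totals.items()}


# Assumed reference point: a "typical" transaction for this customer.
baseline = {"unusual_location": 0, "odd_hour": 0, "amount_ratio": 1.0, "merchant_risk": 0}
flagged = {"unusual_location": 1, "odd_hour": 1, "amount_ratio": 3.0, "merchant_risk": 1}

phi = shapley_values(fraud_score, flagged, baseline)
for name, value in sorted(phi.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:18s} {value:+.3f}")
```

The attributions sum to the gap between the flagged transaction's score and the baseline score, which is the additive property in SHAP's name. The interaction term is split evenly between the two features involved.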

| Transaction Feature | SHAP Impact Level |
|---|---|
| Unusual Location | High - Requires Immediate Review |
| Transaction Timing | Medium - Needs Secondary Check |
| Purchase Amount | Variable - Context-Dependent |
| Merchant Category | Low - Background Factor |

"Explainable AI transforms what would otherwise be inscrutable mathematical decisions into clear, actionable insights that help organizations combat fraud more effectively." [1]

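SHAP attributes each individual score to its features, and averaging those attributions across many transactions gives a broad view of what drives fraud alerts. LIME takes a different approach: it explains one prediction at a time by approximating the model locally.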

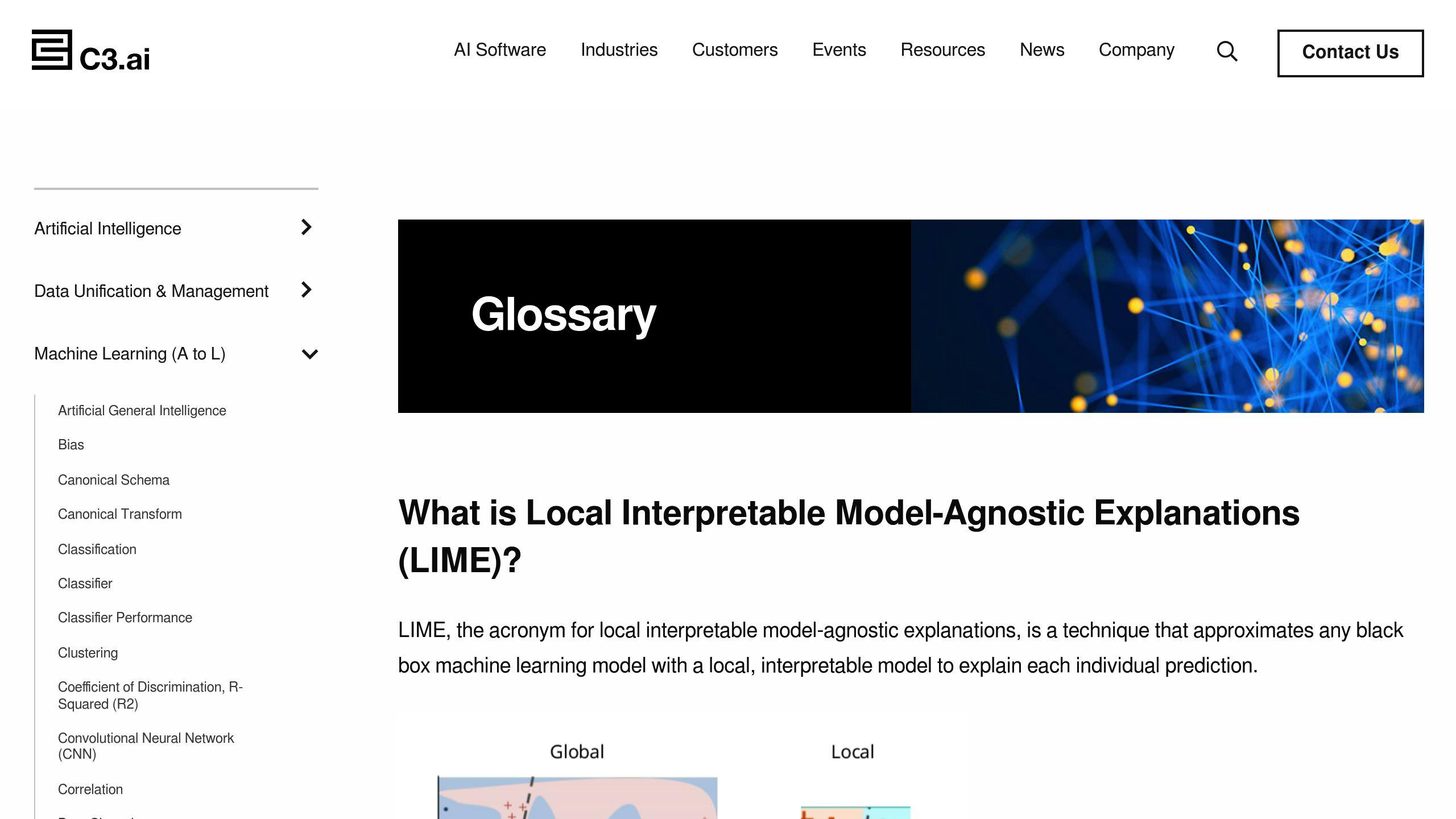

LIME for Individual Fraud Predictions

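LIME (Local Interpretable Model-agnostic Explanations) simplifies AI decisions for specific cases. It perturbs the inputs of a single transaction, observes how the model's prediction changes, and fits a simple interpretable model (typically a sparse linear one) to that local behavior. The resulting weights reveal which factors triggered a fraud alert, helping investigators quickly identify and prioritize high-risk cases.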
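The sketch below illustrates the local-surrogate idea in plain Python. It is a simplified stand-in, not the `lime` package: the opaque model `black_box`, the two features, the sampling scale, and the kernel width are all assumptions, and the real method adds steps such as interpretable feature encodings and feature selection.

```python
import math
import random


def black_box(x):
    """Hypothetical opaque fraud model: returns a fraud probability."""
    z = 1.5 * x[0] + 0.5 * x[1] - 2.0
    return 1.0 / (1.0 + math.exp(-z))


def solve(a, b):
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):
        w[i] = (m[i][n] - sum(m[i][j] * w[j] for j in range(i + 1, n))) / m[i][i]
    return w


def local_surrogate(model, instance, n_samples=500, scale=0.5, kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear model around `instance`."""
    rng = random.Random(seed)
    d = len(instance)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        sample = [v + rng.gauss(0.0, scale) for v in instance]   # perturb the inputs
        dist2 = sum((s - v) ** 2 for s, v in zip(sample, instance))
        weight = math.exp(-dist2 / kernel_width ** 2)            # nearer samples count more
        row = [1.0] + [s - v for s, v in zip(sample, instance)]  # intercept + offsets
        y = model(sample)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    intercept, *coefs = solve(xtwx, xtwy)                        # weighted least squares
    return intercept, coefs


feature_names = ["amount_vs_typical", "merchant_risk"]  # hypothetical features
intercept, coefs = local_surrogate(black_box, [1.0, 1.0])
for name, coef in zip(feature_names, coefs):
    print(f"{name:18s} local weight {coef:+.3f}")
```

The fitted weights describe the model only in the neighborhood of this one transaction. A different transaction can yield a different ranking of factors, which is why such explanations are reviewed case by case.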

To get the most out of SHAP and LIME, financial institutions should focus on:

- Adding real-time analysis to their systems

- Training teams to understand and use SHAP and LIME outputs

- Regularly updating AI models to reflect new fraud patterns

- Combining insights from both methods for a more thorough review process

Examples of Explainable AI in Fraud Detection

Explainable AI in Financial Institutions

Banks are using Explainable AI (XAI) to create advanced fraud detection systems that not only identify suspicious activities but also provide clear explanations for their findings. This approach helps reduce false positives, saving time and improving accuracy. Tools like SHAP (SHapley Additive exPlanations) play a key role by allowing banks to prioritize alerts based on their importance.
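As a rough sketch of how alerts might be prioritized from attribution values, the example below ranks a queue by total attribution and lists the main drivers of each alert. The alert IDs, feature names, and numbers are invented for illustration, and the attributions are assumed to have been computed already by a tool such as SHAP.

```python
alerts = [
    {"id": "A-101", "attributions": {"unusual_location": 0.50, "odd_hour": 0.25, "amount": 0.20}},
    {"id": "A-102", "attributions": {"unusual_location": 0.00, "odd_hour": 0.05, "amount": 0.10}},
    {"id": "A-103", "attributions": {"unusual_location": 0.45, "odd_hour": -0.05, "amount": 0.05}},
]


def priority(alert: dict) -> float:
    """Total attribution: how far the score sits above the model's baseline."""
    return sum(alert["attributions"].values())


def top_drivers(alert: dict, k: int = 2) -> list:
    """Names of the k features that pushed the score up the most."""
    ranked = sorted(alert["attributions"].items(), key=lambda kv: kv[1], reverse=True)
    return [name for name, value in ranked[:k] if value > 0]


def triage(queue: list) -> list:
    """Order alerts so the strongest evidence is reviewed first."""
    return sorted(queue, key=priority, reverse=True)


queue = triage(alerts)
for alert in queue:
    print(alert["id"], f"{priority(alert):.2f}", top_drivers(alert))
```

Ranking by total attribution is one simple choice. A team could equally weight particular features more heavily or combine the ranking with transaction value.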

One standout improvement is the use of dynamic rule generation. Unlike older systems that depend on static rules, XAI-powered solutions can adapt and create filters tailored to specific customer groups.

| Implementation Area | Traditional Approach | XAI-Enhanced Results |
|---|---|---|
| Alert Processing | Manual review of all alerts | Alerts prioritized using SHAP values |
| False Positive Rate | 30% average | Reduced to 5% |
| Investigation Time | Hours per case | Minutes per case |
| Decision Transparency | Limited explanation | Detailed feature analysis |

Explainable AI in Insurance Fraud Detection

Insurance companies have revamped their claim review processes with XAI, enabling adjusters to understand why certain claims are flagged as potentially fraudulent. This clarity leads to quicker investigations and more accurate decisions.

XAI supports automated claim prioritization, provides detailed insights for flagged cases, and speeds up the processing of legitimate claims. It’s especially helpful for handling complex cases that involve multiple factors, allowing adjusters to zero in on the most critical details [3].

When the system detects suspicious patterns, it highlights the key factors involved:

| Alert Factor | XAI Insight Provided |
|---|---|
| Claim Timing | Deviations from typical patterns |
| Documentation | Irregularities in submitted materials |
| Claimant History | Unusual frequency or patterns of claims |
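One way the factors in this table could be surfaced to an adjuster is sketched below. The factor keys, the contribution values, and the 0.10 cutoff are hypothetical; the wording of each insight follows the table.

```python
INSIGHT_TEXT = {
    "claim_timing": "Claim timing deviates from typical patterns",
    "documentation": "Irregularities in submitted materials",
    "claimant_history": "Unusual frequency or patterns of claims",
}


def explain_claim(attributions: dict, min_contribution: float = 0.10) -> list:
    """Turn per-factor contributions into ranked, readable reasons."""
    ranked = sorted(attributions.items(), key=lambda kv: kv[1], reverse=True)
    return [
        f"{INSIGHT_TEXT[name]} (contribution {value:+.2f})"
        for name, value in ranked
        if value >= min_contribution  # hide factors that barely moved the score
    ]


# Hypothetical contributions for one flagged claim.
reasons = explain_claim({"claim_timing": 0.35, "documentation": 0.05, "claimant_history": 0.20})
for reason in reasons:
    print("-", reason)
```

Filtering out small contributions keeps the adjuster's attention on the details most likely to matter in a complex claim.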

These examples show how XAI is changing fraud detection today and hint at even greater possibilities for the future.

Future Developments in Explainable AI for Fraud Detection

Neural Networks with Explainability

Tools like SHAP and LIME laid the groundwork, but the next step in AI fraud detection is the rise of explainable neural networks. These systems improve detection by analyzing patterns in real-time and adjusting to threats dynamically - offering a significant upgrade over older methods.

Explainable neural networks stand out by cutting down false positives and making decisions easier to understand. For example, while traditional systems detect fraud in only about 2% of flagged transactions [2], these advanced networks achieve better accuracy through refined pattern recognition [1].

| Feature | Traditional Neural Networks | Explainable Neural Networks |
|---|---|---|
| Decision Transparency | Black box decisions | Clear, detailed reasoning |
| Processing Speed | Standard processing | Real-time analysis |
| False Positive Rate | High | Much lower |
| Adaptation to New Threats | Limited | Dynamic adjustment |

Meta-Learning and Explainable AI

Meta-learning, often described as "learning to learn", trains AI systems so that they can adapt quickly to new fraud tactics from only a few examples. When paired with explainable AI, it creates a powerful combination of adaptability and transparency [1].

These systems learn from new fraud patterns without needing manual updates, maintain high accuracy, and clearly explain their decisions. This means faster, better-informed responses. As one expert puts it:

"The real power of these explainability techniques lies in their ability to bridge the gap between complex AI models and human understanding." [1]

Financial institutions using these technologies can process massive amounts of real-time data while keeping audit trails clear for regulatory needs [3]. By blending meta-learning with explainable AI, they achieve stronger fraud detection and maintain transparency.

These developments are setting a new benchmark for fraud detection, offering faster, smarter, and more transparent solutions for the future.

AI Tools for Fraud Detection

With the rise of explainable neural networks and meta-learning, choosing the right AI tools for fraud detection has never been more important. Today’s solutions need to handle massive data loads while keeping their decision-making processes transparent.

Finding the Right Tools with Best AI Agents

Best AI Agents (https://bestaiagents.org) simplifies the process of finding AI tools tailored for fraud detection. This platform highlights tools that use technologies like SHAP and LIME, which are designed to ensure transparency and accuracy in decision-making.

To be effective, fraud detection tools should combine these key features:

- Real-time transaction analysis and alerts

- Transparent decision-making processes

- Automatic updates to tackle new fraud patterns

These systems can process thousands of transactions every second [3], all while maintaining a clear audit trail. Transparency is critical, allowing fraud analysts to quickly validate alerts and meet compliance requirements.
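A clear audit trail could be as simple as one structured record per decision that stores the explanation alongside the outcome. The sketch below is an assumption about what such a record might contain; the field names, transaction ID, and model version string are hypothetical and do not come from any particular product.

```python
import json
from datetime import datetime, timezone


def audit_record(tx_id, score, threshold, attributions, model_version, decided_at=None):
    """Serialize one decision, with its top explanatory factors, as a JSON line."""
    decided_at = decided_at or datetime.now(timezone.utc)
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    record = {
        "transaction_id": tx_id,
        "decided_at": decided_at.isoformat(),
        "model_version": model_version,
        "score": score,
        "threshold": threshold,
        "decision": "flagged" if score >= threshold else "cleared",
        "top_factors": [{"feature": n, "contribution": v} for n, v in ranked[:3]],
    }
    return json.dumps(record, sort_keys=True)


line = audit_record(
    tx_id="TX-0001",                      # hypothetical identifier
    score=0.91,
    threshold=0.80,
    attributions={"unusual_location": 0.50, "odd_hour": 0.25, "amount": 0.06},
    model_version="fraud-model-demo",     # hypothetical version label
    decided_at=datetime(2024, 1, 1, tzinfo=timezone.utc),
)
print(line)
```

Recording the model version and threshold with each decision lets an analyst or regulator later reconstruct why a specific transaction was flagged, even after the model has been updated.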

AI-powered tools have also proven their ability to cut down false positives significantly. For instance, they’ve reduced false positives from 30% to just 5% compared to older methods [2]. This leap in accuracy comes from combining advanced pattern recognition with clear, explainable reasoning.

When evaluating fraud detection tools, organizations should look for solutions that offer:

- Real-time analysis

- Transparent fraud alert explanations

- Continuous updates for new fraud patterns

- Seamless integration with existing security systems

Conclusion: Explainable AI and Fraud Detection

Explainable AI is reshaping fraud detection by merging powerful machine learning tools with transparency. Using techniques like SHAP and LIME, these systems not only achieve impressive accuracy but also make their decision-making processes clear and understandable.

Financial institutions have seen measurable benefits. For instance, XAI-powered systems have cut down false positives significantly, offering detailed insights into the transaction patterns that trigger alerts [1]. This clarity speeds up investigations and ensures compliance with regulations.

The integration of explainable neural networks and meta-learning has taken fraud detection to the next level. These systems can process enormous volumes of transaction data, adapt to new threats, and still provide transparency in their reasoning [1] [3]. This approach brings three major benefits:

- Clear, transparent decisions that enhance trust and meet regulatory requirements

- Quick adjustments to new types of fraud

- Detailed reasoning for each flagged transaction

The adoption of explainable AI is a game-changer for financial security. By combining cutting-edge machine learning with transparency, these systems not only detect fraud more effectively but also maintain the trust and understanding needed for lasting success [1] [3].

This shift highlights the growing importance of explainability in building reliable fraud detection solutions that can adapt to new challenges while staying fully transparent [1] [4].

FAQs

How does AI-driven transaction monitoring potentially reduce false positives?

AI-driven transaction monitoring helps cut down on false positives by using real-time pattern recognition and context-based rules. Unlike older systems, AI tailors its analysis to specific customer segments within a financial institution’s portfolio [2]. This allows it to apply multiple filters at once, leading to:

- Improved threat detection

- Enhanced recognition of patterns across large transaction datasets

- Rules that adjust dynamically as new patterns emerge
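To make segment-specific, dynamically adjusting rules concrete, the sketch below keeps a rolling history of amounts per customer segment and flags values far above that segment's recent norm. It uses a simple statistical rule (mean plus `k` standard deviations) as a stand-in for learned rules; the class name, segment names, window size, and `k` are assumptions.

```python
from collections import defaultdict, deque
from statistics import mean, pstdev


class SegmentRules:
    """Per-segment amount limits that move with recent, unflagged activity."""

    def __init__(self, window: int = 100, k: float = 3.0, min_history: int = 5):
        self.k = k
        self.min_history = min_history
        self.history = defaultdict(lambda: deque(maxlen=window))

    def threshold(self, segment: str):
        amounts = self.history[segment]
        if len(amounts) < self.min_history:
            return None  # not enough data to judge this segment yet
        return mean(amounts) + self.k * pstdev(amounts)

    def check(self, segment: str, amount: float) -> bool:
        limit = self.threshold(segment)
        flagged = limit is not None and amount > limit
        if not flagged:
            self.history[segment].append(amount)  # learn only from unflagged activity
        return flagged


rules = SegmentRules()
for amount in [40, 55, 60, 45, 50, 52]:
    rules.check("retail", amount)
for amount in [4000, 5500, 6000, 4500, 5000, 5200]:
    rules.check("corporate", amount)

retail_flag = rules.check("retail", 5000)        # far above retail norms
corporate_flag = rules.check("corporate", 5000)  # ordinary for corporate accounts
print(retail_flag, corporate_flag)
```

The same 5,000 payment is treated differently depending on the segment, which is how tailoring rules to customer groups can remove alerts that a single global threshold would raise.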

For example, tools like SHAP show which transaction features drove a model's fraud score, giving analysts clear insight into why an alert was raised. This enables security teams to concentrate on actual threats instead of wasting time on false alarms.