- Deforum: Generates AI animations from text prompts, with style controls and keyframed camera motion.

- Topaz Video AI: Upscales and cleans up video, with previews for checking results before a full render.

- D-ID Creative Reality Studio: Creates lifelike digital humans and virtual presenters.

- Omniverse: Enables team collaboration with instant rendering and GPU acceleration.

- Weta Digital's AI Tools: Delivers hyper-realistic character animation for blockbuster films.

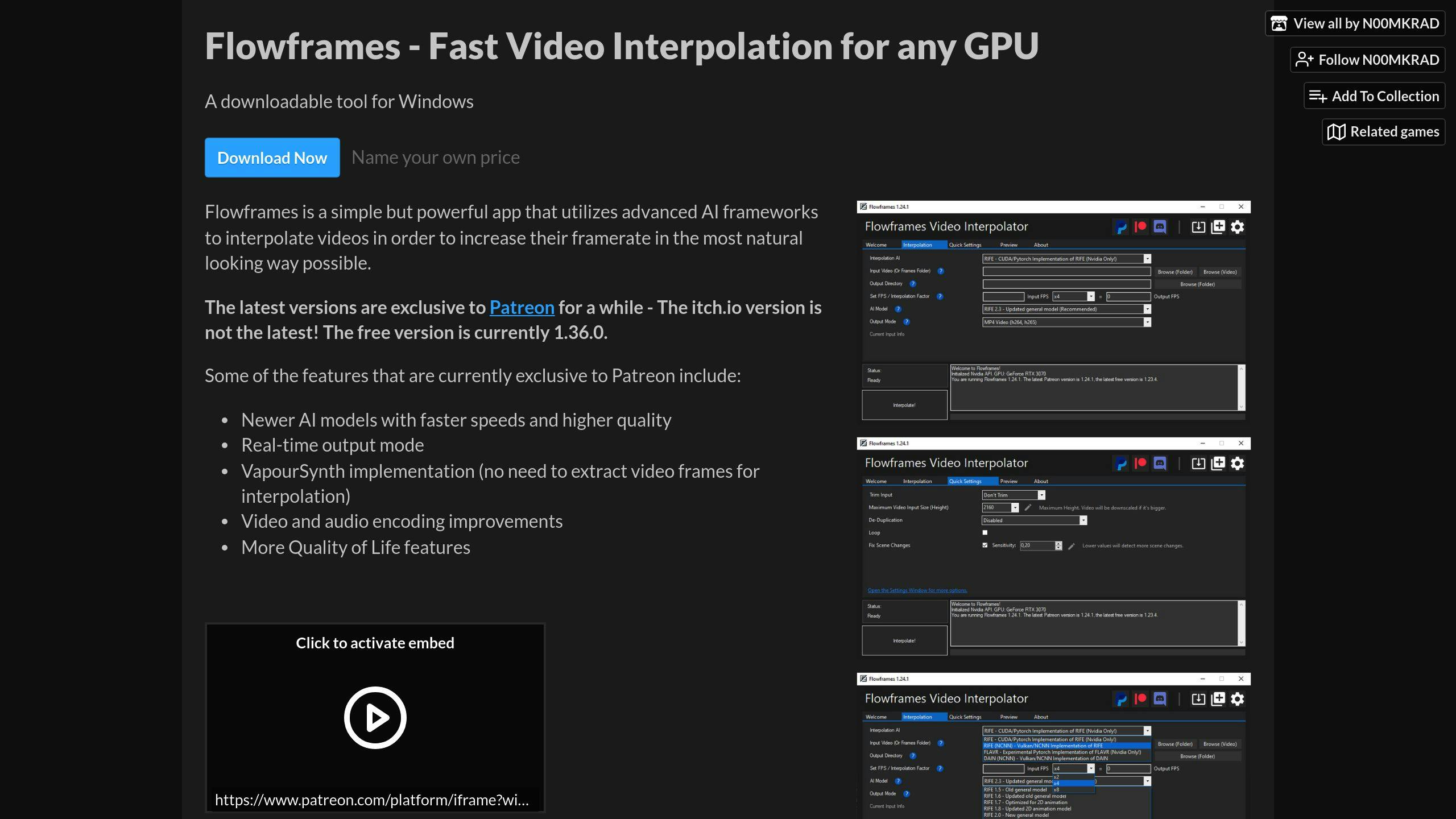

- Flowframes: Focuses on AI frame interpolation for smoother motion and frame-rate conversion.

- Midjourney: Generates images from text prompts for fast concept and visual development.

Quick Comparison

| Tool | Primary Use | Key Features | Target Users |
|---|---|---|---|
| Deforum | AI Animation | Text-prompted animation, style controls, camera motion | Artists & Small Teams |
| Topaz Video AI | Video Upscaling & Cleanup | Noise reduction, quality adjustments | Video Professionals |
| D-ID Creative Reality Studio | Digital Human Creation | Face animation, virtual presenters | Media Experts |
| Omniverse | 3D Collaboration & Rendering | GPU acceleration, team collaboration | Production Studios |
| Weta Digital's AI Tools | High-End VFX | Motion capture, detailed animations | Film Studios |
| Flowframes | Frame Interpolation | Smooth motion, frame-rate conversion | Indie Filmmakers |
| Midjourney | Text-to-Image Generation | Visual creation from text prompts | Creative Teams |

These tools cater to different needs, from indie creators to large film studios, making advanced VFX production faster and more accessible.


1. Deforum

Deforum is an open-source, AI-powered animation tool built on Stable Diffusion. It generates animated sequences frame by frame from text prompts, giving artists control over style, color, and camera movement.

This makes it well suited to stylized motion design and abstract or dreamlike sequences. By automating effects that would otherwise be painted or rendered by hand, Deforum meets the industry's need for quicker and more cost-effective VFX production.

Because it outputs image sequences or video files, its results can be brought into existing compositing and editing software without disrupting workflows. It does require significant GPU power, but the savings in labor and production time can make it a worthwhile investment. Parameters such as prompts, zoom, and camera rotation can be scheduled across frames, so artists can refine a shot by adjusting values and re-rendering.
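Deforum animates parameters such as zoom or camera rotation through keyframe schedules written as strings like `0:(1.0), 30:(1.5)`, meaning "value 1.0 at frame 0, value 1.5 at frame 30". The sketch below is a simplified, hypothetical model of how such a schedule turns into one value per frame: the helper names are illustrative, it only accepts plain numeric keyframes, and it assumes linear interpolation between keys. Deforum's own parser also accepts math expressions (for example, functions of the frame number `t`), which this sketch does not handle.

```python
import re

# Hypothetical pattern: matches "frame:(value)" pairs that hold plain numbers only.
_KEYFRAME = re.compile(r"(\d+)\s*:\s*\(\s*(-?[\d.]+)\s*\)")


def parse_schedule(schedule: str) -> dict[int, float]:
    """Parse a schedule string such as "0:(1.0), 30:(1.5)" into {frame: value}."""
    keyframes = {int(f): float(v) for f, v in _KEYFRAME.findall(schedule)}
    if not keyframes:
        raise ValueError("schedule contains no numeric keyframes")
    return keyframes


def interpolate(keyframes: dict[int, float], num_frames: int) -> list[float]:
    """Return one value per frame, interpolating linearly between keyframes
    and holding the first/last value before/after the keyed range."""
    frames = sorted(keyframes)
    values = []
    for f in range(num_frames):
        if f <= frames[0]:
            values.append(keyframes[frames[0]])
        elif f >= frames[-1]:
            values.append(keyframes[frames[-1]])
        else:
            # Find the pair of keyframes that surrounds frame f.
            for a, b in zip(frames, frames[1:]):
                if a <= f <= b:
                    t = (f - a) / (b - a)
                    values.append(keyframes[a] + t * (keyframes[b] - keyframes[a]))
                    break
    return values


zoom = interpolate(parse_schedule("0:(1.0), 30:(1.5)"), 31)
print(zoom[0], zoom[15], zoom[30])
```

Holding the end values constant outside the keyed range keeps the sketch predictable when a schedule starts after frame 0 or ends before the last frame.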

To get the most out of Deforum, professionals should ensure they have the necessary resources, incorporate it into their workflows effectively, and closely monitor output quality. Skilled users with a strong grasp of VFX principles and AI tools can achieve top-tier results.

Deforum's prompt-driven animation makes it a versatile tool for modern VFX. Up next, we’ll look into another cutting-edge tool, Topaz Video AI.

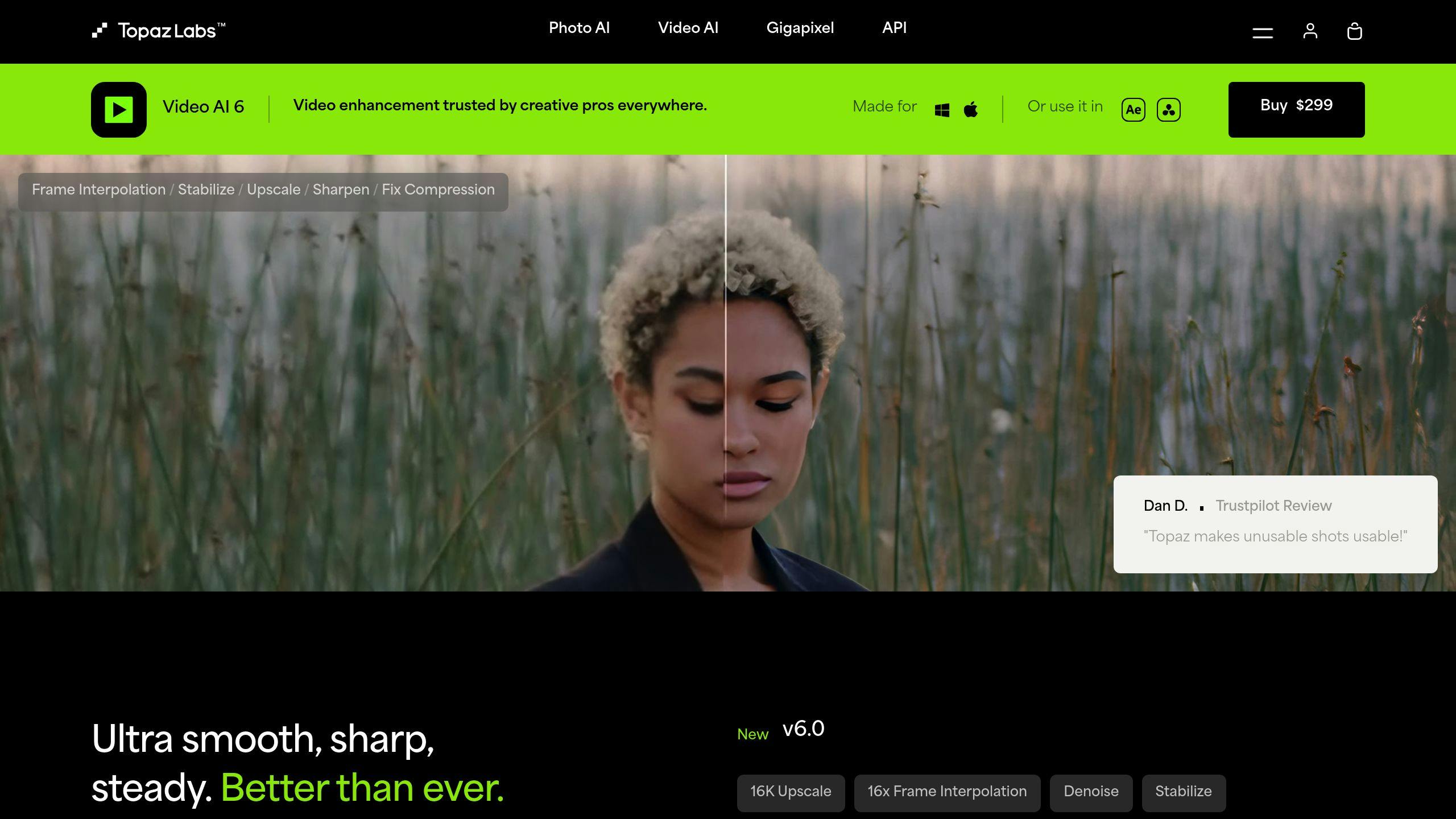

2. Topaz Video AI

Topaz Video AI is a desktop application for improving video quality that fits naturally into VFX workflows. It handles tasks like upscaling, noise reduction, and frame-rate conversion, helping footage meet demanding industry standards.
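To make "upscaling" concrete, here is classic bilinear interpolation, the kind of non-AI baseline that learned upscalers are designed to outperform. This is not how Topaz Video AI works: it is a minimal pure-Python sketch on a grayscale image stored as nested lists, and the "align corners" coordinate mapping is an assumption chosen for simplicity.

```python
def bilinear_upscale(image: list[list[float]], scale: int) -> list[list[float]]:
    """Upscale a grayscale image (a list of pixel rows) by an integer factor
    using bilinear interpolation with an align-corners coordinate mapping."""
    if scale < 1 or not image or not image[0]:
        raise ValueError("need a non-empty image and scale >= 1")
    h, w = len(image), len(image[0])
    out_h, out_w = h * scale, w * scale
    out = []
    for y in range(out_h):
        # Map the output row back to a fractional source row.
        sy = y * (h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0
        row = []
        for x in range(out_w):
            # Map the output column back to a fractional source column.
            sx = x * (w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0
            # Blend horizontally on the two neighboring rows, then vertically.
            top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
            bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


small = [[0.0, 10.0], [20.0, 30.0]]
for row in bilinear_upscale(small, 2):
    print([round(v, 2) for v in row])
```

Bilinear interpolation can only average existing pixels, so it softens edges and cannot invent fine detail; AI upscalers instead learn from training data what plausible detail looks like, which is why they tend to produce sharper results.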

What sets this tool apart is how easily it fits into existing VFX processes: footage can be enhanced before or after other pipeline stages without replacing any other tools. Its preview feature lets artists test settings on a short section of footage before committing to a full render, which suits the industry's constant demand for fast, high-quality output.

A standout feature of Topaz Video AI is its ability to automate intricate video enhancement tasks without sacrificing professional quality. This reduces both production time and costs, making it especially valuable for VFX teams working to tight schedules.

However, to get the best results, the tool requires robust hardware and experienced professionals who can align AI-generated outputs with creative objectives. This balance ensures efficiency while maintaining artistic integrity.

Up next, we’ll dive into how D-ID Creative Reality Studio pushes the boundaries of creativity with AI.

3. D-ID Creative Reality Studio

D-ID Creative Reality Studio is an AI-driven platform for creating digital humans. It animates a still portrait into a speaking presenter driven by a typed script or an uploaded audio track, making it a practical option for virtual presenters and other media production.

Powered by deep learning models for facial animation, the platform generates natural-looking facial expressions, lip movement, and head motion from a single image. Because a new video can be generated without a camera crew or a traditional shoot, teams can revise scripts and regenerate results quickly, turning what used to be a production and post-production cycle into a faster, more interactive process.

Finished videos can be downloaded and edited in standard tools, and D-ID also offers an API for generating videos programmatically, so it can fit into existing production pipelines without disrupting them.

By automating time-consuming processes, D-ID helps teams meet tight deadlines without sacrificing quality. However, given its capabilities in deepfake and facial manipulation, it's crucial to use this technology responsibly and within controlled environments [1][4].

As AI and virtual production continue to advance, D-ID evolves alongside them, offering creators faster and more precise ways to achieve high-quality visual effects [2]. Next, we’ll dive into how Omniverse uses AI to improve collaboration in real-time VFX workflows.

4. Omniverse

NVIDIA's Omniverse is reshaping how real-time visual effects are created, leveraging GPU acceleration and AI-powered tools. This platform supports smooth collaboration and instant rendering, streamlining what used to be time-consuming workflows.

With its real-time processing, Omniverse allows creators to instantly visualize complex scenes. Artists can quickly tweak lighting, textures, and other effects, creating a fast feedback loop that's especially useful in virtual production.

One standout feature is its integration with popular industry tools like Maya, Blender, and Unreal Engine. By connecting with these programs, Omniverse complements existing workflows and adds AI-powered enhancements without forcing teams to change their preferred tools.

The gains can be significant. For interactive previews, Omniverse can cut rendering from hours to near real-time, and it is credited with lowering production costs by up to 70% and improving workflow efficiency by 90%, although actual savings vary by project. GPU acceleration also lets teams collaborate simultaneously on complex scenes without waiting on renders.

For example, during the production of The Matrix Resurrections, Omniverse enabled real-time visualization of intricate environments. This not only sped up post-production but also gave creators more control over their vision. Its scalable design, powered by NVIDIA's advanced architecture, ensures it can handle even the most demanding scenes without sacrificing quality.

NVIDIA continues to refine Omniverse, expanding its AI tools and integrations to push the limits of real-time visual effects. Its ability to manage complex environments instantly while delivering top-tier results has made it a key player in modern VFX workflows.

Up next, we'll dive into how Weta Digital's AI tools are advancing these capabilities for large-scale productions.


5. Weta Digital's AI Tools

Weta Digital's AI tools are reshaping how real-time effects are created, especially in blockbuster filmmaking. With real-time visualization, directors and artists can turn what used to be post-production work into interactive, on-set creative sessions.

These tools are designed for high-end cinematic productions, focusing on hyper-realistic character animation, dynamic environments, and instant motion capture processing. Unlike general-purpose platforms like Omniverse, Weta Digital's tools are built specifically to handle the complexities of major film projects.

Using deep learning, they automate challenging tasks like facial animation, saving both time and resources. For example, during the production of Avatar, Weta Digital's tools delivered detailed facial animations, capturing subtle emotions while significantly reducing production time. Their system also allows seamless collaboration between departments, which is essential for projects involving intricate character work and elaborate visual effects.

Though these tools demand considerable computational power and expertise, their influence on modern filmmaking can't be ignored. Weta Digital continues to push their AI capabilities further, improving real-time rendering quality and exploring more uses in virtual production.

While Weta Digital's tools dominate large-scale productions, other AI tools, such as Flowframes, focus on specific tasks like frame interpolation to optimize visual effects workflows.

6. Flowframes

Flowframes is a Windows application that uses AI frame-interpolation models such as RIFE and DAIN to generate in-between frames, delivering smooth motion and high-quality visuals. It's particularly useful in VFX workflows where footage needs a new frame rate or slow motion quickly and accurately.

It can process high-resolution footage, although processing time and GPU memory use grow with resolution. Because it works on recorded footage rather than live signals, it suits time-sensitive post-production better than live broadcasts.

The AI models behind Flowframes produce smoother motion with fewer of the ghosting and blurring artifacts typical of older frame-blending methods, maintaining visual consistency. This is especially handy for projects that involve changing frame rates, like converting 24fps footage to 60fps for streaming platforms.
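The 24fps-to-60fps case is worth working through, because 60 / 24 = 2.5 is not a whole number. The sketch below is not Flowframes' code; it only illustrates the timing arithmetic behind any frame-rate conversion. For each output frame it computes the source frame just before it and the exact fractional position `t` between that frame and the next, assuming the interpolation model can synthesize a frame at an arbitrary `t` (some models only support fixed 2× steps). The function name is hypothetical.

```python
from fractions import Fraction


def interpolation_plan(src_fps: int, dst_fps: int, num_out: int) -> list[tuple[int, Fraction]]:
    """For each output frame, return (prev, t): the index of the source frame
    just before it and its exact fractional position t in [0, 1) toward
    source frame prev + 1. t == 0 means the output frame reuses a source frame."""
    plan = []
    for i in range(num_out):
        # Output frame i sits at time i / dst_fps, i.e. i * src_fps / dst_fps source frames.
        pos = Fraction(i * src_fps, dst_fps)
        prev = pos.numerator // pos.denominator  # floor for non-negative positions
        plan.append((prev, pos - prev))
    return plan


one_second = interpolation_plan(24, 60, 60)
for prev, t in one_second[:6]:
    print(prev, t)
reused = sum(1 for _, t in one_second if t == 0)
print(f"{reused} of {len(one_second)} output frames reuse a source frame")
```

For 24fps to 60fps, output frames fall at 0, 2/5, 4/5, 6/5, and 8/5 source frames before the pattern repeats, so only 12 of the 60 output frames per second coincide with original frames and the other 48 must be synthesized. Using exact fractions rather than floats avoids rounding errors that could misclassify a frame as "on" or "off" a source frame.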

Because interpolation is automated, artists can try different target frame rates or slow-motion factors and compare the results with little manual effort, which helps on live-event turnarounds and tight production schedules.

Flowframes outputs standard video files, so its results drop easily into existing editing workflows. It runs on consumer GPUs, which makes it accessible even for smaller studios.

Here’s a quick look at its main features:

- Automated processing with minimal setup

- Support for high-resolution footage, limited mainly by GPU memory

- Advanced frame interpolation for smoother motion

- Frame-rate conversion and slow-motion output

- Standard video output that fits existing editing software

While it doesn't offer the extensive features of tools like Omniverse or Weta Digital's suite, Flowframes excels in focused tasks, making it an effective choice for studios looking to boost their real-time VFX capabilities.

Next up, we’ll dive into how Midjourney uses AI to create visually striking effects for creative projects.

7. Midjourney

Midjourney uses deep learning to turn text prompts into detailed images, making it a popular tool for concept art and visual development in VFX production. Its AI-driven system lets creators generate visuals quickly and refine them by adjusting their prompts, and the results can be carried into existing workflows.

What sets Midjourney apart is its ability to create custom visuals directly from text prompts. This speeds up production, minimizes delays, and encourages creativity during filming or editing.

Here’s what makes it stand out in VFX workflows:

- Fast iterations: Generate several variations of an image quickly and refine the strongest one.

- AI-generated detail: Produces highly refined visual elements.

- Pipeline-friendly output: Exported images can serve as concept art, reference, or texture sources in existing VFX pipelines.

- Customizable visuals: Offers creative control through text prompts.

Midjourney is budget-friendly, with subscription plans suitable for both solo creators and large studios. Its active user community shares tips and techniques, helping professionals get the most out of the tool. Because it generates images quickly, teams can explore and refine visual ideas without slowing down production.

Although similar to other AI tools, Midjourney’s focus on text-to-visual generation gives it an edge. Its ongoing updates are tailored to meet the demands of modern VFX production, blending efficiency with creative freedom to improve workflows.

Midjourney is a prime example of how AI is reshaping VFX production, opening up new possibilities for creators and studios.

Comparison Table

Here's a quick breakdown of how different AI tools are used in real-time VFX production, highlighting their main features and target audiences:

| Tool | Primary Use | Key Features | Target Users |
|---|---|---|---|
| Deforum | AI Animation for Motion Design | • AI animation • Custom style controls • Instant preview | Artists & Small Teams |
| Topaz Video AI | Video Upscaling & Cleanup | • Smart upscaling • Noise reduction • Quality adjustments | Video Professionals |
| D-ID Creative Reality Studio | Digital Human Creation | • Face animation • Virtual presenters • Content security | Ad Agencies & Media Experts |
| Omniverse | 3D Virtual Worlds & Simulations | • Team collaboration • Physics simulation • GPU acceleration | Production Studios |
| Weta Digital's AI Tools | High-End VFX | • CGI integration • Character animation • Studio-grade rendering | Film Studios |
| Flowframes | Frame Interpolation | • AI-powered smoothing • Frame-rate conversion • Video optimization | Indie Filmmakers |
| Midjourney | Text-to-Image Generation | • Visual creation • Style customization • Quick iterations | Creative Teams |

Each tool addresses specific challenges within the VFX pipeline, offering a range of solutions from beginner-friendly options like Deforum to advanced tools like Weta Digital's suite for large-scale productions [1][3].

Choosing the right tool depends on factors like project size, budget, and workflow needs. For example, D-ID is ideal for creating lifelike digital humans, while Omniverse supports large teams building complex 3D environments [3][4].

These tools show how AI is reshaping visual effects, making advanced techniques more accessible and reducing both production time and costs [1][4]. Whether you're an indie creator or a big studio, there's an option tailored to your needs.

Conclusion

The comparison table highlights how different AI tools address specific challenges, showcasing their impact on VFX production. These tools are reshaping workflows by making advanced techniques faster, more accessible, and cost-efficient.

AI can now handle complex tasks like motion capture and rendering, cutting down production time and expenses. At the same time, it opens up new creative opportunities, such as developing lifelike digital humans and dynamic environments. This shift has brought high-quality VFX tools within reach for smaller studios and independent creators, leveling the playing field in the industry [1][5].

Tools like Midjourney and D-ID provide creators with powerful options, from generating visuals with text prompts to crafting realistic digital humans. Virtual production technologies, on the other hand, allow filmmakers to build entire digital worlds in real time, pushing the boundaries of what’s possible in VFX [4].

To stay relevant, professionals need to integrate AI tools into their workflows while tackling ethical concerns, especially around transparency in AI-generated content [2][3]. Rather than replacing human creativity, these technologies enhance it, allowing artists to focus more on innovative storytelling while meeting production deadlines.

Trends like virtual production and augmented reality point to even more advanced applications of AI in VFX. From immersive storytelling to refined character animation, these tools continue to raise the bar for visual effects. As AI technology evolves, its role in transforming VFX production across all scales remains a key driver of progress in the industry [1][5].

FAQs

Can AI create VFX?

Yes. AI tools are now an essential part of producing advanced visual effects, automating tasks that used to take a lot of time.

"AI has brought significant advancements to animation and visual effects in the film industry. It can automate tasks like motion capture, rendering, and even character creation." - Carmatec, AI in Media and Entertainment Complete Guide [1]

Here are a few ways AI is transforming VFX:

- Character Animation and Real-Time Rendering: AI improves character animation and real-time rendering, making movements more lifelike and allowing directors to tweak scenes instantly during filming. This has changed how post-production is traditionally done [4].

- Automated Post-Production: AI tools simplify post-production by handling tasks like generating realistic visuals that capture human movements and expressions. This makes high-quality VFX more accessible for smaller studios and independent creators [1][4].

However, while AI boosts both productivity and creativity, it also brings ethical concerns, especially with misuse in areas like deepfake technology. Ensuring responsible use and maintaining professional oversight is key to protecting artistic vision and quality.

From tools like Midjourney, which generates visuals from text, to Omniverse's real-time collaboration features, AI is reshaping how VFX workflows operate. These advancements present exciting possibilities and challenges for creators in the industry.